Introduction to Edge Computing

Edge computing represents a paradigm shift in how data is processed, stored, and analyzed in today’s hyper-connected world. At its core, edge computing is a distributed computing framework that brings enterprise applications closer to data sources, such as Internet of Things (IoT) devices or local edge servers. This proximity allows for faster insights, improved response times, and better bandwidth availability. Unlike traditional cloud computing, where data travels to centralized data centers for processing, edge computing handles data at or near the point of generation, minimizing latency and reducing the need for constant data transmission over networks.

The rise of edge computing is driven by the explosive growth of data and connected devices. In 2024, the total amount of data created and consumed globally reached 149 zettabytes, projected to surge to 394 zettabytes by 2028. This data deluge, fueled by IoT, 5G networks, and AI applications, has exposed the limitations of centralized systems, including high latency, bandwidth constraints, and increased costs. Edge computing addresses these by decentralizing processing, enabling real-time decision-making essential for applications like autonomous vehicles, smart cities, and industrial automation.

In 2025, edge computing is no longer a niche technology but a cornerstone of digital infrastructure. Gartner predicted that by 2025, 75% of enterprise-generated data would be created and processed outside a traditional centralized data center or cloud, up from about 10% in 2018. This shift is transforming industries by enhancing efficiency, security, and innovation. As we explore this topic, we’ll cover its history, mechanics, architectures, benefits, challenges, applications, current statistics, and future trends.

History of Edge Computing

The concept of edge computing has roots in the evolution of computing paradigms, tracing back to the late 1990s. It emerged as a response to the limitations of centralized computing, building on content delivery networks (CDNs) introduced by companies like Akamai to distribute web content closer to users and reduce latency. Early forms focused on caching data at network edges to improve website performance, but the true catalyst was the proliferation of IoT devices in the early 2010s.

In the 2000s, as cloud computing gained traction with services like Amazon Web Services (launched in 2006), the need for faster processing became apparent. Centralized clouds excelled in scalability but struggled with real-time applications because data had to travel long distances. By the mid-2010s, the term “edge computing” had gained prominence through initiatives like the Open Edge Computing Initiative, which aimed to standardize processing at the network periphery.

The late 2010s saw rapid advancements driven by early 5G deployments and AI integration. In 2018, only about 10% of enterprise-generated data was processed outside centralized data centers or clouds, and Gartner predicted a dramatic increase. Major players like IBM, Microsoft, and Google invested heavily, launching edge-specific platforms. In 2020, the COVID-19 pandemic accelerated adoption, as remote work and telemedicine demanded low-latency solutions.

By 2025, edge computing has matured into a multi-billion-dollar market, with hardware, software, and services integrating seamlessly with hybrid cloud models. Its history reflects a journey from supplementary caching to essential infrastructure, shaped by technological convergence and data explosion.

How Edge Computing Works

Edge computing operates by shifting data processing from remote central servers to locations closer to the data source. The process begins with data generation from edge devices like sensors, cameras, or smartphones. Instead of sending raw data to a distant cloud, local edge servers or gateways perform initial filtering, analysis, and decision-making. Only essential, aggregated data is transmitted to the cloud for long-term storage or complex computations.
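The filter-then-aggregate step can be illustrated with a minimal Python sketch. It assumes a gateway that receives temperature readings in batches; the `Reading` type, the `ALERT_THRESHOLD` value, and the `summarize_batch` function are hypothetical illustrations, not part of any specific edge platform.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    sensor_id: str
    value: float  # e.g., temperature in degrees Celsius


# Hypothetical alert threshold; a real deployment would configure this per sensor.
ALERT_THRESHOLD = 80.0


def summarize_batch(readings: list[Reading]) -> dict:
    """Reduce a batch of raw readings to a compact summary for the cloud.

    Raw values stay on the gateway; only aggregate statistics and the
    readings that crossed the alert threshold are forwarded.
    """
    if not readings:
        return {"count": 0, "alerts": []}
    values = [r.value for r in readings]
    alerts = [r for r in readings if r.value >= ALERT_THRESHOLD]
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": round(mean(values), 2),
        "alerts": [(r.sensor_id, r.value) for r in alerts],
    }


if __name__ == "__main__":
    batch = [Reading("s1", 71.5), Reading("s2", 82.0), Reading("s1", 72.5)]
    print(summarize_batch(batch))
```

Three raw readings collapse into one small summary plus a single alert; at scale, the same idea is what keeps most raw data off the wide-area link.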

Key to this is the network layer, which connects devices using technologies like 5G, Wi-Fi, or satellite for efficient data flow. For example, an autonomous vehicle’s onboard sensors can generate on the order of 1 GB of data per second; edge processing analyzes this stream in real time to trigger immediate actions such as braking, while only summaries are sent to the cloud. This can cut latency to under 5 milliseconds, compared to 20-40 milliseconds in typical cloud round trips.
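Some back-of-the-envelope arithmetic puts these figures in perspective. The sketch below takes the 1 GB/s sensor rate and the 5, 20, and 40 ms latencies from the text, plus an assumed highway speed of 100 km/h, and computes how much raw data accumulates per hour and how far the vehicle travels before a response arrives.

```python
def data_per_hour_tb(rate_gb_per_s: float) -> float:
    """Raw data generated in one hour, in terabytes (1 TB = 1000 GB)."""
    return rate_gb_per_s * 3600 / 1000


def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance in metres covered while waiting latency_ms for a response."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


if __name__ == "__main__":
    print(f"{data_per_hour_tb(1.0):.1f} TB/hour")  # 3.6 TB/hour
    for latency in (5, 20, 40):
        d = distance_during_latency_m(100, latency)  # assumed 100 km/h
        print(f"{latency} ms at 100 km/h -> {d:.2f} m")
```

At 40 ms the vehicle moves about 1.11 m before it can act; at 5 ms, about 0.14 m. At the same time, shipping 3.6 TB per hour of raw data to the cloud is rarely practical.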

The workflow includes enrolling devices, running local computation (often with AI models), and synchronizing results with central systems. Security measures such as encryption, device authentication, and message integrity checks help keep these operations safe.
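Here is a minimal sketch of the enrollment, integrity-check, and synchronization steps. It assumes each device is enrolled with a per-device secret key and signs its messages with HMAC-SHA256 so the gateway can verify them before syncing. The `Gateway` class and the message format are hypothetical, and the AI inference step is omitted. Production systems more commonly use certificates and TLS than shared keys.

```python
import hashlib
import hmac
import json
import secrets


def sign(key: bytes, payload: dict) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the payload."""
    body = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


class Gateway:
    """Hypothetical edge gateway: enrolls devices, verifies messages, queues them for sync."""

    def __init__(self) -> None:
        self.keys: dict[str, bytes] = {}
        self.outbox: list[dict] = []  # verified messages awaiting cloud sync

    def enroll(self, device_id: str) -> bytes:
        """Register a device and issue it a random 256-bit secret key."""
        key = secrets.token_bytes(32)
        self.keys[device_id] = key
        return key

    def receive(self, device_id: str, payload: dict, signature: str) -> bool:
        """Accept a message only if the device is enrolled and the signature matches."""
        key = self.keys.get(device_id)
        if key is None:
            return False
        # compare_digest avoids leaking timing information during comparison.
        if not hmac.compare_digest(sign(key, payload), signature):
            return False
        self.outbox.append({"device": device_id, **payload})
        return True

    def sync(self) -> list[dict]:
        """Hand queued messages to the cloud uploader and clear the outbox."""
        batch, self.outbox = self.outbox, []
        return batch


if __name__ == "__main__":
    gw = Gateway()
    key = gw.enroll("cam-01")
    msg = {"objects": 3}
    print(gw.receive("cam-01", msg, sign(key, msg)))             # True
    print(gw.receive("cam-01", {"objects": 9}, sign(key, msg)))  # False: tampered payload
    print(gw.sync())
```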

Types of Edge Computing

Edge computing manifests in various forms, each tailored to specific needs.

Device Edge

This involves processing directly on the end device, such as a smartwatch analyzing heart-rate data locally. It’s ideal for simple tasks that need minimal computation, and it reduces dependency on the network.

Sensor Edge

Sensors in industrial settings process data on-site, filtering noise before transmission. This type excels in environments with high data volumes, like manufacturing floors.

Mobile Edge

In 5G networks, multi-access edge computing (MEC, originally called mobile edge computing) places servers at or near base stations for ultra-low latency, supporting applications like AR/VR.

Branch Edge

For enterprises, branch edge deployments place edge servers in local sites, such as retail stores, for tasks like real-time inventory management.

Each type balances processing power, latency, and cost, often combined in hybrid setups.
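One way to reason about that balance is a placement rule that picks the cheapest tier still meeting a workload’s latency budget and compute needs. The tiers below are a subset of those described above. Their latency, capacity, and cost figures and the `place_workload` function are hypothetical placeholders chosen for illustration, not measured values.

```python
from typing import NamedTuple, Optional


class Tier(NamedTuple):
    name: str
    latency_ms: float     # hypothetical typical response time
    capacity: int         # hypothetical compute capacity (arbitrary units)
    cost_per_unit: float  # hypothetical relative cost per compute unit


# Closer tiers respond faster but have less capacity and cost more per unit.
TIERS = [
    Tier("device edge", 1, 1, 5.0),
    Tier("branch edge", 5, 20, 2.0),
    Tier("mobile edge (MEC)", 10, 100, 1.5),
    Tier("cloud", 50, 10_000, 1.0),
]


def place_workload(latency_budget_ms: float, compute_units: int) -> Optional[str]:
    """Return the cheapest tier that meets both the latency budget and the compute need."""
    candidates = [
        t for t in TIERS
        if t.latency_ms <= latency_budget_ms and t.capacity >= compute_units
    ]
    if not candidates:
        return None  # no single tier fits; split the workload or relax requirements
    return min(candidates, key=lambda t: t.cost_per_unit).name


if __name__ == "__main__":
    print(place_workload(2, 1))      # tight budget, tiny job
    print(place_workload(15, 10))    # moderate budget, moderate job
    print(place_workload(100, 500))  # relaxed budget, heavy job
```

With these placeholder numbers, a tight latency budget forces work onto the device, while relaxed budgets let heavy jobs fall through to the cheaper cloud tier. The rule also signals when no tier can satisfy a request.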

Architecture of Edge Computing

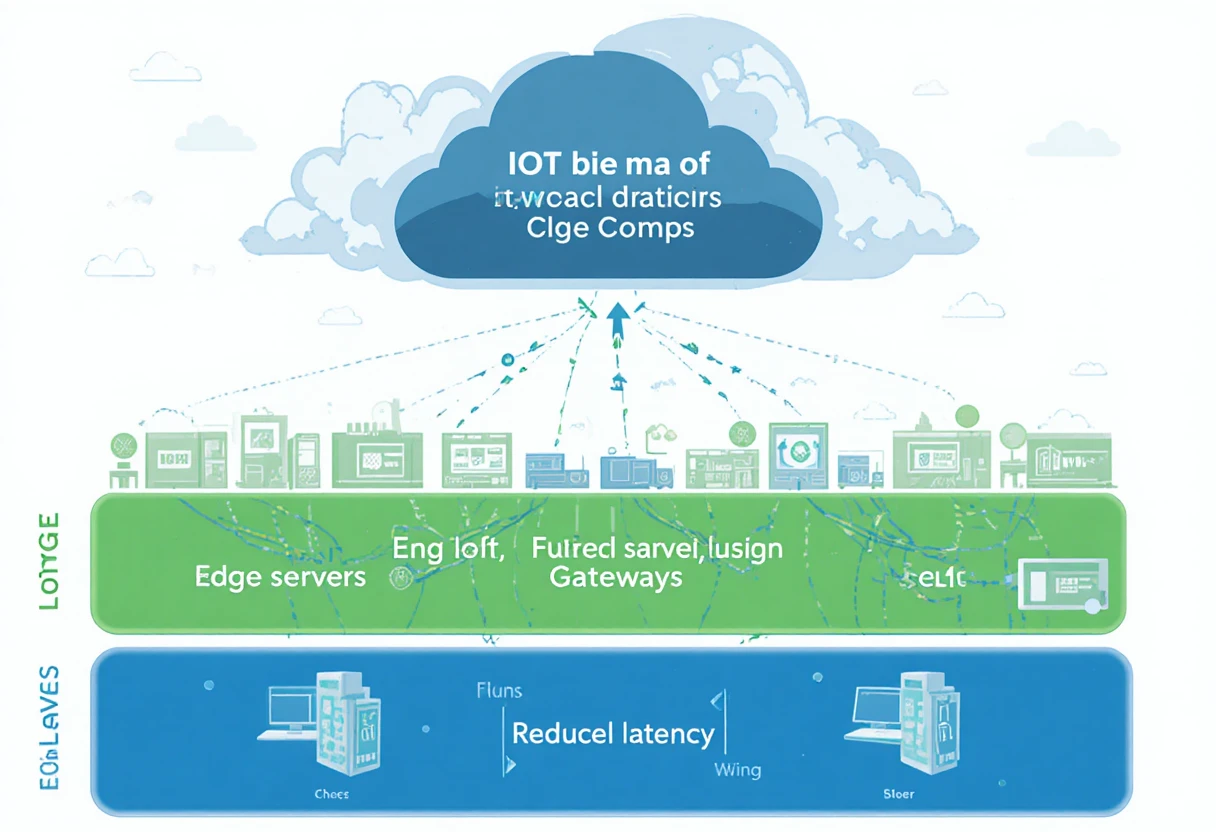

The architecture of edge computing is distributed, comprising multiple layers for efficient data handling. At the base is the device layer, including IoT endpoints that generate data. Above it, edge gateways aggregate and preprocess information, acting as intermediaries.

Edge servers form the core, running containerized applications for local analytics. They connect via a network layer to the cloud layer, which handles heavier tasks such as AI model training. Hybrid models, often called edge cloud, tie these layers together and typically rely on orchestration tools like Kubernetes to deploy and manage workloads across sites.
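To show the kind of decision an orchestrator makes, here is a toy label-based scheduler. It is loosely inspired by Kubernetes node selectors but does not use or mimic the Kubernetes API. It assumes each node advertises labels and free CPU, and each workload declares required labels and a CPU request. All names, labels, and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    labels: dict[str, str]
    free_millicores: int  # remaining CPU capacity on the node


@dataclass
class Workload:
    name: str
    selector: dict[str, str]  # labels a node must carry to host this workload
    millicores: int           # CPU the workload requests


def schedule(workloads: list[Workload], nodes: list[Node]) -> dict[str, Optional[str]]:
    """Greedy placement: each workload goes to the matching node with the most free CPU."""
    placement: dict[str, Optional[str]] = {}
    for w in workloads:
        fits = [
            n for n in nodes
            if all(n.labels.get(k) == v for k, v in w.selector.items())
            and n.free_millicores >= w.millicores
        ]
        if not fits:
            placement[w.name] = None  # unschedulable; it would remain pending
            continue
        target = max(fits, key=lambda n: n.free_millicores)
        target.free_millicores -= w.millicores  # reserve capacity
        placement[w.name] = target.name
    return placement


if __name__ == "__main__":
    nodes = [
        Node("store-12", {"tier": "edge", "site": "store-12"}, 2000),
        Node("store-40", {"tier": "edge", "site": "store-40"}, 1000),
        Node("cloud-1", {"tier": "cloud"}, 16000),
    ]
    jobs = [
        Workload("shelf-camera", {"tier": "edge", "site": "store-12"}, 1500),
        Workload("demand-model", {"tier": "cloud"}, 4000),
        Workload("pos-cache", {"tier": "edge", "site": "store-12"}, 1000),
    ]
    print(schedule(jobs, nodes))
```

Real orchestrators add scoring, preemption, and rescheduling on failure. The sketch only shows why labels matter when the same cluster spans stores and a cloud region.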

Fog computing serves as an intermediate layer, providing temporary storage and processing for devices that lack the resources to handle it themselves. This tiered structure supports scalability, with software-defined networking enabling dynamic resource allocation.
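Temporary storage at an intermediate node is often implemented as store-and-forward buffering. This minimal sketch assumes a fog node that holds records while its uplink is down and forwards them in order once the link returns. The `StoreAndForward` class and the drop-oldest policy are illustrative choices, not a standard.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Hypothetical fog-node buffer that holds records while the uplink is down.

    A bounded deque keeps memory use predictable; when full, the oldest
    records are dropped first (a policy choice, not a requirement).
    """

    def __init__(self, capacity: int, send: Callable[[dict], bool]) -> None:
        self.buffer: deque[dict] = deque(maxlen=capacity)
        self.send = send  # returns True if the upstream accepted the record

    def submit(self, record: dict) -> None:
        """Queue a record, then try to drain the queue."""
        self.buffer.append(record)
        self.flush()

    def flush(self) -> int:
        """Send buffered records in order; stop at the first failure."""
        sent = 0
        while self.buffer:
            if not self.send(self.buffer[0]):
                break  # uplink still down; keep the record for later
            self.buffer.popleft()
            sent += 1
        return sent


if __name__ == "__main__":
    uplink_up = False
    received: list[dict] = []

    def fake_send(record: dict) -> bool:
        # Simulated uplink: accepts records only when uplink_up is True.
        if uplink_up:
            received.append(record)
        return uplink_up

    node = StoreAndForward(capacity=3, send=fake_send)
    for i in range(5):
        node.submit({"seq": i})  # uplink down: only the newest 3 are kept
    uplink_up = True
    node.flush()
    print(received)  # [{'seq': 2}, {'seq': 3}, {'seq': 4}]
```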

Best practices include containerization for portability and automation for monitoring, addressing the complexity of distributed systems.
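Automated monitoring across many distributed sites often starts with heartbeats. This sketch assumes each edge node periodically reports in and that a node is considered stale once its last heartbeat is older than a configurable timeout. The `HeartbeatMonitor` class and the 30-second timeout are hypothetical.

```python
import time
from typing import Optional


class HeartbeatMonitor:
    """Flags edge nodes whose last heartbeat is older than a timeout."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.last_seen: dict[str, float] = {}

    def heartbeat(self, node_id: str, now: Optional[float] = None) -> None:
        """Record a heartbeat; `now` can be injected for testing."""
        self.last_seen[node_id] = time.monotonic() if now is None else now

    def stale_nodes(self, now: Optional[float] = None) -> list[str]:
        """Return node IDs that have been silent longer than the timeout."""
        current = time.monotonic() if now is None else now
        return sorted(
            node for node, seen in self.last_seen.items()
            if current - seen > self.timeout_s
        )


if __name__ == "__main__":
    mon = HeartbeatMonitor(timeout_s=30)  # hypothetical timeout
    mon.heartbeat("gw-a", now=0.0)
    mon.heartbeat("gw-b", now=20.0)
    print(mon.stale_nodes(now=45.0))  # ['gw-a']
```

Using a monotonic clock avoids false alarms when a node’s wall-clock time is adjusted. In practice, the stale list would feed an alerting or auto-remediation step.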

Benefits of Edge Computing

Edge computing offers transformative advantages across operational, economic, and security dimensions. Foremost is latency reduction, enabling near-real-time responses critical for applications like telesurgery or autonomous driving. By processing locally, it can cut response times to single-digit milliseconds.

Bandwidth efficiency is another key benefit; only filtered data travels to the cloud, reducing costs by up to 30% and alleviating network congestion. This is vital in remote areas with limited connectivity.

Security improves because sensitive data stays local, minimizing exposure during transmission and aiding compliance with regulations like GDPR. Reliability also improves, since systems can keep operating offline during network outages.
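Keeping sensitive data local often means minimizing what leaves the site. This sketch assumes a record with hypothetical `patient_id`, `name`, and GPS fields. The edge node drops fields not on an allow-list, replaces the identifier with a keyed pseudonym (HMAC-SHA256 with a site-held secret), and coarsens location before upload. Pseudonymized data can still count as personal data under GDPR, so this illustrates data minimization, not full anonymization.

```python
import hashlib
import hmac

# Hypothetical site-local secret; in practice this would live in a secure key store.
SITE_KEY = b"replace-with-a-securely-stored-secret"

# Only these fields are allowed to leave the site unchanged.
ALLOWED_FIELDS = {"heart_rate", "timestamp"}


def minimize(record: dict) -> dict:
    """Return a reduced copy of a record that is safer to send to the cloud."""
    out = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    # Keyed pseudonym: stable per patient, but not linkable to the
    # original ID by anyone who does not hold SITE_KEY.
    out["subject"] = hmac.new(
        SITE_KEY, record["patient_id"].encode(), hashlib.sha256
    ).hexdigest()[:16]
    # Coarsen location to roughly 1 km by rounding to two decimal places.
    out["lat"] = round(record["lat"], 2)
    out["lon"] = round(record["lon"], 2)
    return out


if __name__ == "__main__":
    raw = {
        "patient_id": "P-1042",
        "name": "Jane Doe",
        "heart_rate": 72,
        "timestamp": 1700000000,
        "lat": 52.520008,
        "lon": 13.404954,
    }
    print(minimize(raw))  # no name or raw ID; coarse coordinates
```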

Additionally, it supports AI at the edge, enabling predictive analytics without cloud dependency. Overall, edge computing boosts efficiency, cuts costs, and fosters innovation.

Challenges and Disadvantages of Edge Computing

Despite its strengths, edge computing presents notable challenges. Management complexity arises from distributed devices, complicating updates and monitoring. With projections of 77 billion edge-enabled IoT devices by 2030, scalability becomes daunting.
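One common way to manage updates across a very large fleet is a staged rollout: update a small canary group first and widen only if it stays healthy. This sketch assumes wave boundaries given as cumulative percentages and caller-supplied `deploy` and `wave_healthy` callbacks. The function names and percentages are hypothetical.

```python
from typing import Callable


def rollout_waves(device_ids: list[str], cumulative_percents: list[int]) -> list[list[str]]:
    """Split a fleet into update waves.

    `cumulative_percents` gives each wave's cumulative share of the fleet,
    e.g. [1, 10, 100] means 1% first, then up to 10%, then everyone.
    Integer arithmetic avoids floating-point rounding surprises.
    """
    n = len(device_ids)
    waves: list[list[str]] = []
    start = 0
    for pct in cumulative_percents:
        end = min(n, (pct * n + 99) // 100)  # ceiling of pct% of n
        if end > start:
            waves.append(device_ids[start:end])
            start = end
    return waves


def run_rollout(
    waves: list[list[str]],
    deploy: Callable[[list[str]], None],
    wave_healthy: Callable[[list[str]], bool],
) -> int:
    """Deploy wave by wave, halting at the first wave that fails its health check.

    Returns the number of waves that were deployed and passed.
    """
    for i, wave in enumerate(waves):
        deploy(wave)
        if not wave_healthy(wave):
            return i  # later waves never receive the faulty update
    return len(waves)


if __name__ == "__main__":
    fleet = [f"dev-{i:04d}" for i in range(1000)]
    waves = rollout_waves(fleet, [1, 10, 100])
    print([len(w) for w in waves])  # [10, 90, 900]
```

Halting after a failed wave limits the blast radius of a bad update to a small fraction of devices, which matters more as fleets grow into the billions.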

Security risks escalate: each device expands the attack surface and can be targeted by DDoS attacks or spoofing. Privacy concerns also arise, since data collected and stored locally could enable unauthorized tracking.

Resource limitations on edge devices restrict complex computations, often necessitating hybrid approaches. Deploying infrastructure in harsh environments, such as powering roadside servers, adds further cost.

Connectivity issues affect 10-15% of locations, causing bottlenecks. Addressing these requires robust security protocols and advanced management tools.
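A standard mitigation for intermittent connectivity is to retry uploads with exponential backoff and jitter, so that thousands of devices reconnecting after an outage do not all retry at the same instant. This is a minimal sketch of the widely used “full jitter” variant. The `send` callback and the default delays are assumptions, and `sleep` is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Callable


def send_with_backoff(
    send: Callable[[], bool],
    max_attempts: int = 5,
    base_delay_s: float = 0.5,
    max_delay_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Retry an upload with exponential backoff and full jitter.

    The delay before retry k is drawn uniformly from
    [0, min(max_delay_s, base_delay_s * 2**k)].
    """
    for attempt in range(max_attempts):
        if send():
            return True
        if attempt < max_attempts - 1:  # no sleep after the final attempt
            cap = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(random.uniform(0, cap))
    return False


if __name__ == "__main__":
    outcomes = iter([False, False, True])  # simulated: fails twice, then succeeds
    print(send_with_backoff(lambda: next(outcomes), sleep=lambda s: None))  # True
```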

Applications of Edge Computing

Edge computing’s versatility shines in diverse industries, enabling real-time innovations.

In Manufacturing

In smart factories, edge processes sensor data for predictive maintenance, reducing downtime by 30%. It enables automated quality control and safety monitoring.
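Predictive maintenance at the edge often begins with lightweight anomaly detection that can run on a gateway. This sketch uses a rolling z-score on vibration readings. The window size, the 3-sigma limit, and the `VibrationMonitor` class are hypothetical choices, and production systems frequently use richer models.

```python
from collections import deque
from statistics import mean, pstdev


class VibrationMonitor:
    """Flags readings that deviate strongly from a rolling baseline.

    A reading is anomalous if it lies more than `z_limit` standard
    deviations from the mean of the last `window` readings.
    """

    def __init__(self, window: int = 50, z_limit: float = 3.0, min_history: int = 10) -> None:
        self.history: deque[float] = deque(maxlen=window)
        self.z_limit = z_limit
        self.min_history = min_history  # readings needed before judging

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to recent history."""
        anomalous = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            anomalous = sigma > 0 and abs(value - mu) / sigma > self.z_limit
        # Every reading joins the baseline, so a lasting shift stops being
        # flagged once the window has absorbed it (a deliberate trade-off).
        self.history.append(value)
        return anomalous


if __name__ == "__main__":
    monitor = VibrationMonitor(window=20)
    for i in range(20):
        monitor.update(1.0 if i % 2 == 0 else 1.2)  # normal vibration (arbitrary units)
    print(monitor.update(2.0))  # True: a spike far outside the baseline
```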

In Healthcare

Wearables and monitors analyze patient vitals on-site, enabling remote diagnostics and instant alerts; the market for edge computing in healthcare is projected to reach $12.9 billion by 2028.

In Transportation

Autonomous vehicles rely on edge for processing environmental data, enhancing safety. Fleet management optimizes routes in real-time.

In Retail and Smart Cities

Retail uses edge for personalized experiences and inventory tracking. Smart cities adjust traffic and energy grids dynamically.

Other applications include agriculture for precision farming and finance for fraud detection.

Edge Computing in 2025: Statistics and Current Trends

In 2025, edge computing is booming: global spending reached an estimated $228 billion in 2024 and is projected to hit $378 billion by 2028. IoT devices number about 18.8 billion, generating data at a 34% CAGR.
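The growth rates implied by these projections can be checked with the compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch applies it to the spending figures above and to the 149 to 394 zettabyte data projection quoted in the introduction, both spanning 2024 to 2028.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Figures quoted in this article (2024 -> 2028, four years).
    print(f"Edge spending: {cagr(228, 378, 4):.1%} per year")
    print(f"Global data:   {cagr(149, 394, 4):.1%} per year")
```

This works out to roughly 13.5% annual growth in edge spending and about 27.5% annual growth in global data volume over the same period.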

Trends include AI integration for edge inference, 5G synergy for ultra-low latency, and a focus on sustainability, with analog chips reportedly cutting power consumption by up to 90%. Security trends emphasize early threat mitigation, as 47% cite cybersecurity as a barrier.

Adoption spans automotive, healthcare, and retail, with 75% of CIOs boosting AI budgets.

Future of Edge Computing

Looking ahead, edge computing is expected to evolve with 6G, which aims to deliver sub-millisecond latency by around 2030. Trends include multi-modal AI, digital twins for simulations, and service meshes for distributed architectures.

The market is forecasted to reach $702.8 billion by 2033, driven by IoT growth to 40 billion industrial devices. Sustainability and ethical AI will shape regulations, with edge enabling autonomous operations in space and remote locales.

Integration with agentic AI promises proactive systems, reshaping industries.

Conclusion

Edge computing stands as a pivotal technology in 2025, bridging the gap between data generation and actionable insights. From its historical roots in CDNs to its current central role in real-time applications, it offers significant benefits in speed, efficiency, and security. While challenges like management and security persist, ongoing innovations are helping to mitigate them. As data volumes explode, edge computing will continue to drive digital transformation, fostering a more responsive and intelligent world.